Structure from motion & NeRF

Developed a multi-view 3D reconstruction pipeline and implemented Neural Radiance Fields (NeRF) for photorealistic rendering.

Hi 👋, I’m a Robotics Engineer at Amazon Robotics working on multimodal sensing for robots in unstructured environments.

I recently completed my M.S. in Robotics Engineering at Worcester Polytechnic Institute (WPI) in May 2024, where I worked on context-aware dexterous manipulation, advised by Prof. Berk Calli. You can find my thesis here! This research was sponsored by an Amazon Robotics Greater Boston Tech Initiative grant.

My long-term goal is to create robotic systems that operate robustly in complex, unstructured, and partially observable environments by combining rich context awareness with human-aligned behavior. When I’m not tinkering with cameras on robots, you can find me playing with my own camera, exploring photography techniques.

Leveraging Dexterous Picking Skills for Complex Multi-Object Scenes

2024 IEEE-RAS International Conference on Humanoid Robots (Humanoids), Nancy, France, 2024

TLDR: We developed a deep learning-based framework leveraging four human-inspired dexterous picking skills for robust robotic manipulation in complex, cluttered multi-object scenarios, validated through real-world experiments.

ICMLDE (Springer), 2022

TLDR: We learn a thermal-to-RGB colorization model and show that colorized inputs can improve downstream pedestrian detection versus grayscale thermal baselines.

Qualitative Colorization of Thermal Infrared Images using custom CNNs

IEEE DELCON, 2022

TLDR: We design lightweight CNNs for LWIR image colorization and evaluate perceptual quality, producing more informative visuals for scene understanding.

Rugby ball detection, tracking and future trajectory prediction algorithm

Springer LNEE, 2021

TLDR: A detection-tracking-prediction pipeline locates a rugby ball in video and forecasts its short-term trajectory using simple motion models.
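As an illustration of what a "simple motion model" can look like, the sketch below fits a constant-velocity model to the last few tracked ball centers by least squares and extrapolates it forward. The helper name `predict_trajectory` and the choice of a constant-velocity model (rather than, say, a projectile model) are assumptions for illustration, not the paper's method.

```python
import numpy as np

def predict_trajectory(positions, n_future, dt=1.0):
    """Extrapolate 2D positions with a least-squares constant-velocity fit.

    positions: (N, 2) past (x, y) centers, oldest first, N >= 2.
    Returns an (n_future, 2) array of predicted centers.
    """
    positions = np.asarray(positions, dtype=float)
    n = len(positions)
    t = np.arange(n) * dt
    # Model x(t) = p0 + v * t; solve for x and y columns jointly.
    A = np.stack([np.ones(n), t], axis=1)
    coeffs, *_ = np.linalg.lstsq(A, positions, rcond=None)  # rows: p0, v
    p0, v = coeffs[0], coeffs[1]
    t_future = t[-1] + dt * np.arange(1, n_future + 1)
    return p0 + np.outer(t_future, v)

track = [(0, 0), (2, 1), (4, 2), (6, 3)]
print(predict_trajectory(track, 2))  # approximately [[8, 4], [10, 5]]
```

Fitting over several frames, instead of differencing only the last two, smooths out per-frame detection jitter.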

Optimized detection, classification and tracking with YOLOv5, HSV color thresholding and KCF

Springer LNEE, 2021

TLDR: We integrate YOLOv5 with HSV color filtering and KCF tracking to boost frame-to-frame consistency and real-time performance for multi-class tracking.
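HSV thresholding keeps pixels whose hue falls inside a target band, which can serve as a cheap color check alongside a detector. The NumPy sketch below illustrates the idea; it is not the paper's implementation, and `hsv_mask` with its saturation/value cutoffs is hypothetical. Hue is in degrees [0, 360). With OpenCV one would typically use `cv2.cvtColor(..., cv2.COLOR_BGR2HSV)` and `cv2.inRange` instead, keeping in mind that 8-bit OpenCV hue spans 0-179.

```python
import numpy as np

def hsv_mask(rgb, h_range, s_min=0.3, v_min=0.2):
    """Boolean mask of pixels whose hue lies in h_range with enough saturation and value.

    rgb: (H, W, 3) float array in [0, 1].
    h_range: (lo, hi) hue band in degrees; lo > hi means the band wraps through 0 (red).
    """
    rgb = np.asarray(rgb, float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    cmax = rgb.max(axis=-1)                 # HSV value
    delta = cmax - rgb.min(axis=-1)         # chroma
    safe = np.where(delta > 0, delta, 1.0)  # avoid 0/0 on gray pixels
    # Standard piecewise hue formula, in degrees.
    hue = 60.0 * np.where(
        cmax == r, ((g - b) / safe) % 6,
        np.where(cmax == g, (b - r) / safe + 2, (r - g) / safe + 4),
    )
    sat = delta / np.where(cmax > 0, cmax, 1.0)
    lo, hi = h_range
    if lo <= hi:
        in_band = (hue >= lo) & (hue <= hi)
    else:
        in_band = (hue >= lo) | (hue <= hi)
    # Gray pixels (delta == 0) have no defined hue, so they are always rejected.
    return in_band & (sat >= s_min) & (cmax >= v_min) & (delta > 0)
```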


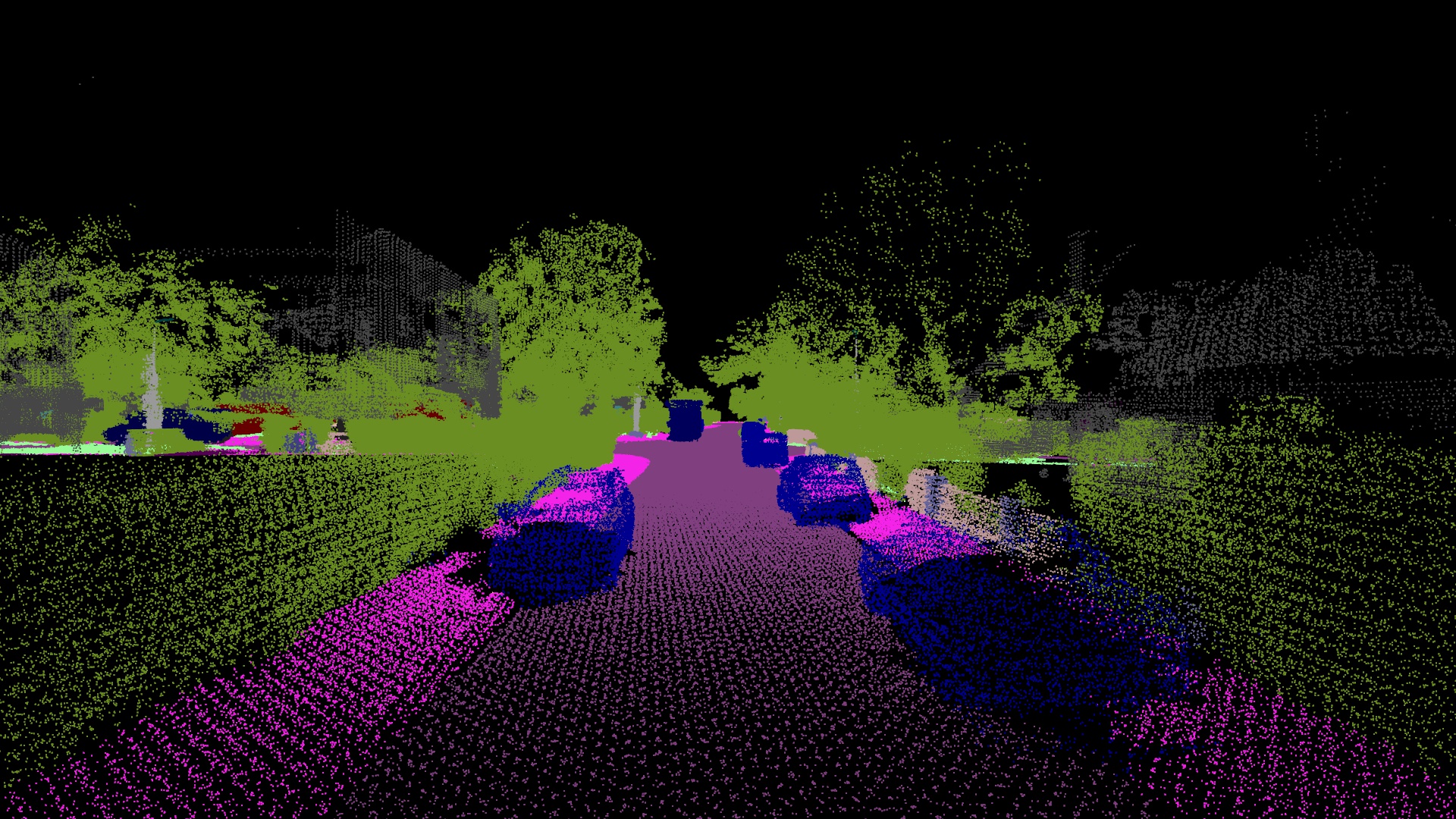
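For the structure-from-motion and NeRF project: NeRF renders each pixel by sampling densities and colors along the camera ray and alpha-compositing them, with per-sample weights w_i = T_i * (1 - exp(-sigma_i * delta_i)), where T_i is the transmittance accumulated before sample i. A minimal NumPy sketch of that compositing step for one ray, assuming the densities and colors have already been produced by the network; the MLP, positional encoding, and hierarchical sampling are omitted, and `composite_ray` is a hypothetical name.

```python
import numpy as np

def composite_ray(sigmas, colors, t_vals):
    """Alpha-composite samples along one ray (NeRF-style quadrature).

    sigmas: (N,) volume densities, colors: (N, 3) RGB, t_vals: (N,) sample depths.
    Returns the rendered RGB and the per-sample weights.
    """
    sigmas = np.asarray(sigmas, float)
    colors = np.asarray(colors, float)
    t_vals = np.asarray(t_vals, float)
    # Spacing between adjacent samples; the last interval is treated as effectively infinite.
    deltas = np.append(np.diff(t_vals), 1e10)
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): fraction of light reaching sample i.
    trans = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights
```

The same weights can also be used to render an expected depth, `(weights * t_vals).sum()`, which is handy for comparing against the SfM reconstruction.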

Stitched LiDAR scans into a single point cloud and performed semantic segmentation, projecting the resulting labels onto the merged 3D data.
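If the segmentation is run on camera images (an assumption; the description does not say which sensor was segmented), labels reach the 3D points by projecting each point through a calibrated pinhole camera and reading the class at the pixel it lands on. A minimal sketch, assuming the points are already expressed in the camera frame and the intrinsics `K` are known; `project_labels` is a hypothetical helper, and lens distortion and occlusion handling are ignored.

```python
import numpy as np

def project_labels(points_cam, K, label_img):
    """Assign each 3D point the 2D segmentation label of the pixel it projects to.

    points_cam: (N, 3) points in the camera frame.
    K: (3, 3) pinhole intrinsics. label_img: (H, W) integer class map.
    Points behind the camera or outside the image get label -1.
    """
    pts = np.asarray(points_cam, float)
    h, w = label_img.shape
    labels = np.full(len(pts), -1, dtype=int)
    in_front = pts[:, 2] > 0
    uvw = pts[in_front] @ np.asarray(K, float).T  # homogeneous pixel coordinates
    # Pixel indices by flooring the perspective-divided coordinates.
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(int)
    valid = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    idx = np.flatnonzero(in_front)[valid]
    labels[idx] = label_img[v[valid], u[valid]]
    return labels
```

Running this per scan before merging lets every point carry its label into the stitched cloud.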

Converted audio into spectrograms and compared which time-frequency transforms work best as features for downstream transcription.
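One representation commonly included in such comparisons is the log-magnitude short-time Fourier transform (mel and constant-Q spectrograms are typical alternatives). A minimal NumPy sketch; `log_spectrogram`, the window size, and the hop length are illustrative choices, not values from the project.

```python
import numpy as np

def log_spectrogram(signal, n_fft=512, hop=128, eps=1e-10):
    """Log-magnitude STFT spectrogram with a Hann window.

    Requires len(signal) >= n_fft.
    Returns shape (n_fft // 2 + 1, n_frames): frequency bins x time frames.
    """
    x = np.asarray(signal, float)
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))  # (n_frames, n_fft // 2 + 1)
    return np.log(mag + eps).T

sr = 8000
t = np.arange(sr) / sr
spec = log_spectrogram(np.sin(2 * np.pi * 1000 * t))
print(spec.shape)  # (257, 59)
```

With these settings each frequency bin is sr / n_fft = 15.625 Hz wide, so the 1 kHz test tone peaks in bin 64; the window length trades frequency resolution against time resolution, which is one of the knobs such a feature study varies.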

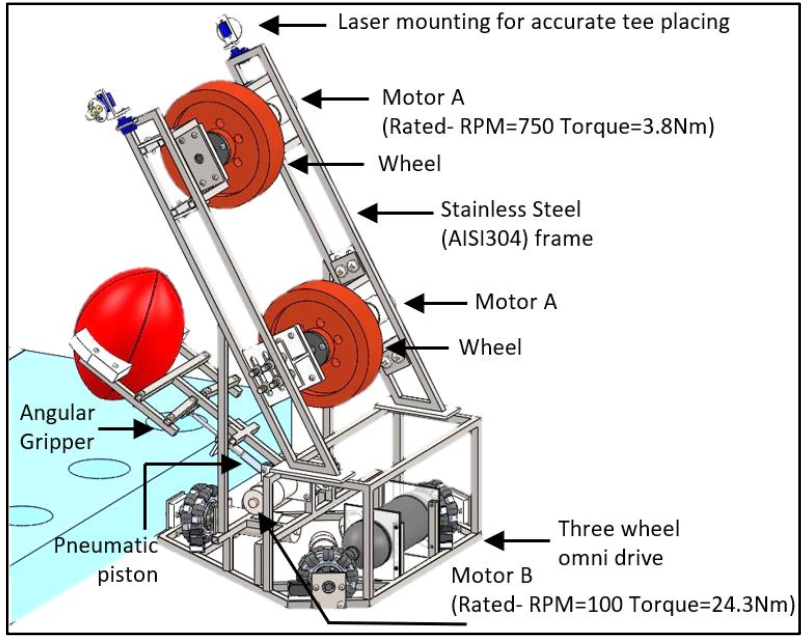

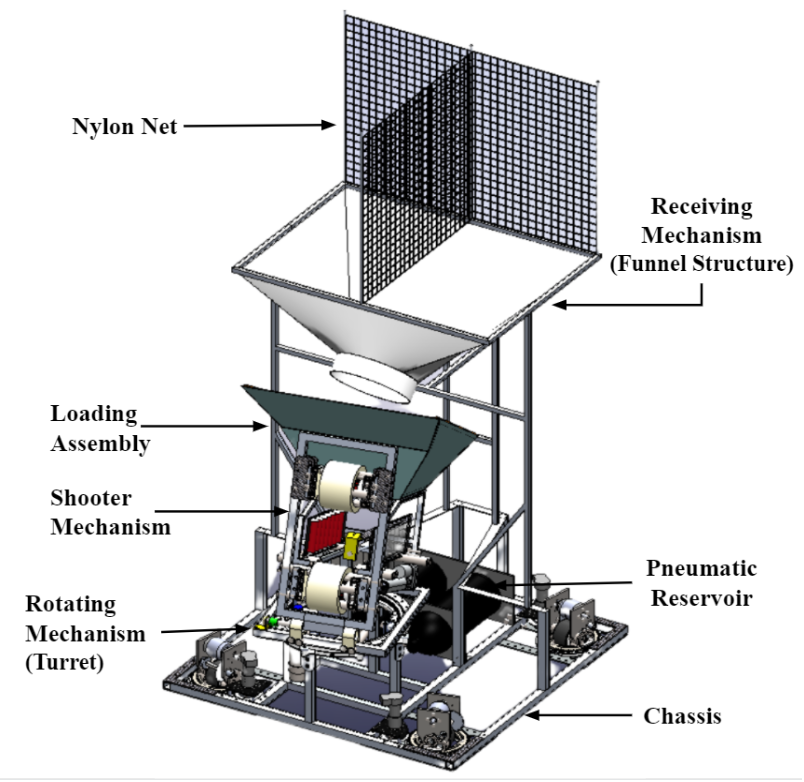

Engineered a high-agility swerve-drive robot with a flywheel-based projectile mechanism and pneumatic autoloader for a ball-throwing task.
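A swerve drive gets its agility from steering every wheel module independently. Assuming a standard planar rigid-body model (not this robot's actual controller), each module's speed and steering angle follow from the chassis velocity and yaw rate, since a point at offset r moves with v + omega x r. `swerve_module_states` is a hypothetical name.

```python
import math

def swerve_module_states(vx, vy, omega, module_positions):
    """Inverse kinematics for a swerve drive.

    vx, vy: desired chassis velocity in the robot frame (m/s); omega: yaw rate (rad/s).
    module_positions: list of (x, y) module offsets from the rotation center (m).
    Returns a list of (wheel_speed, steer_angle_rad) per module.
    """
    states = []
    for rx, ry in module_positions:
        # Planar rigid-body velocity at the module: v + omega x r.
        mvx = vx - omega * ry
        mvy = vy + omega * rx
        states.append((math.hypot(mvx, mvy), math.atan2(mvy, mvx)))
    return states
```

In practice the wheel speeds are usually rescaled together when any of them exceeds the motor limit, so the commanded motion keeps its direction.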

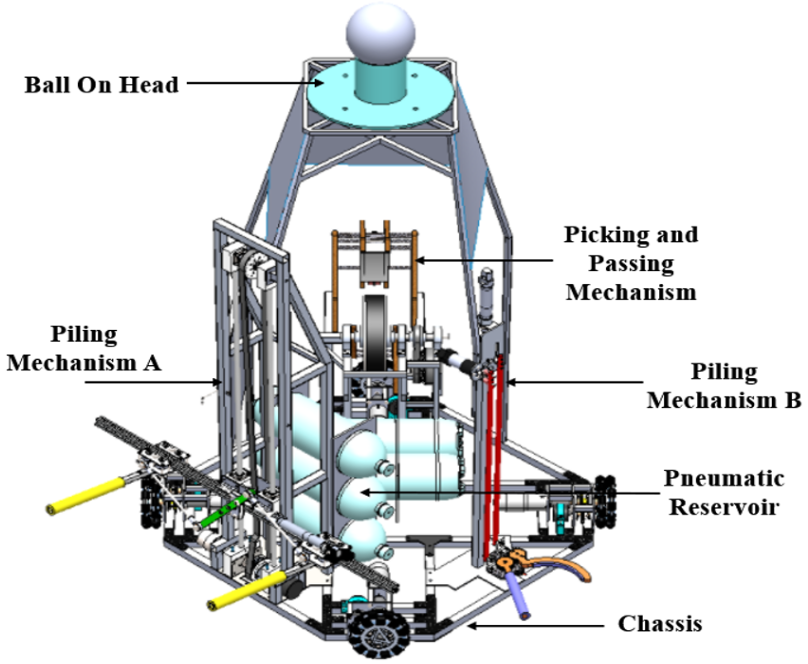

Developed an omni-drive mobile manipulator with a dual slider-crank parallel gripper for autonomous object detection and precision stacking.
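In a slider-crank, rotating the crank drives the slider along a straight line, which is what lets a rotary motor open and close a parallel jaw. For an in-line (zero-offset) slider-crank with crank radius r and rod length l, the slider sits at x = r cos(theta) + sqrt(l^2 - r^2 sin^2(theta)) from the crank pivot. The sketch assumes that geometry; the gripper's actual link lengths and offsets are not given, and `slider_position` is a hypothetical name.

```python
import math

def slider_position(theta, r, l):
    """Slider displacement from the crank pivot for an in-line slider-crank.

    theta: crank angle (rad); r: crank radius; l: connecting-rod length (l > r).
    """
    return r * math.cos(theta) + math.sqrt(l**2 - (r * math.sin(theta)) ** 2)

r, l = 1.0, 3.0
stroke = slider_position(0.0, r, l) - slider_position(math.pi, r, l)
print(stroke)  # 2.0, i.e. twice the crank radius
```

Because the total stroke is fixed at 2r, the crank radius effectively sets the maximum jaw travel, while the rod length shapes how linearly the jaw moves with crank angle.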

Implemented a synchronized ball transfer system using a roller-flywheel mechanism for robust, high-speed handoffs between mobile robots.

Colorization + pedestrian detection (YOLOv5 + CNN). Trained on CAMEL; evaluated quantitatively (including RMSE) and qualitatively.
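RMSE compares a colorized output with the ground-truth RGB frame pixel by pixel; lower is better. A minimal sketch, assuming both images are aligned arrays of the same shape and value range (scoring in [0, 1] versus [0, 255] changes the scale of the number).

```python
import numpy as np

def rmse(pred, target):
    """Root-mean-square error over all pixels and channels."""
    pred = np.asarray(pred, float)
    target = np.asarray(target, float)
    if pred.shape != target.shape:
        raise ValueError("pred and target must have the same shape")
    return float(np.sqrt(np.mean((pred - target) ** 2)))
```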

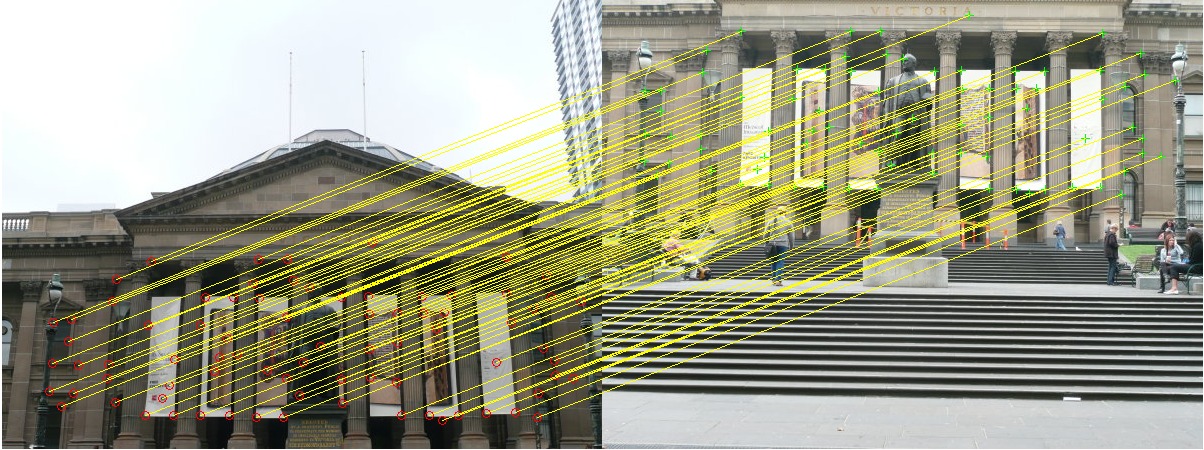

ANMS, feature matching, RANSAC, and homography-based warping; plus a deep-learning variant on MS-COCO.
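The geometric core of this stitching pipeline is robust homography fitting. A compact sketch of the DLT-plus-RANSAC step, assuming putative matches (e.g., after ANMS and feature matching) are already available as two (N, 2) arrays. The helper names, the iteration count, and the 3-pixel threshold are illustrative; Hartley coordinate normalization, which improves conditioning on real pixel coordinates, is omitted for brevity.

```python
import numpy as np

def homography_dlt(src, dst):
    """Estimate H (3x3) with dst ~ H @ src from >= 4 point pairs via the DLT."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, float))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_h(H, pts):
    """Map (N, 2) points through H with a perspective divide."""
    pts = np.asarray(pts, float)
    homo = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homo[:, :2] / homo[:, 2:3]

def ransac_homography(src, dst, n_iters=1000, thresh=3.0, seed=0):
    """Robustly fit H to putative matches; returns (H, inlier_mask)."""
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        idx = rng.choice(len(src), 4, replace=False)  # minimal sample
        H = homography_dlt(src[idx], dst[idx])
        err = np.linalg.norm(apply_h(H, src) - dst, axis=1)  # reprojection error
        mask = err < thresh
        if mask.sum() > best_mask.sum():
            best_mask = mask
    if best_mask.sum() < 4:
        raise ValueError("not enough inliers to fit a homography")
    # Refit on all inliers of the best model.
    return homography_dlt(src[best_mask], dst[best_mask]), best_mask
```

The returned H is what the warping step uses to map one image into the other's frame before blending.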

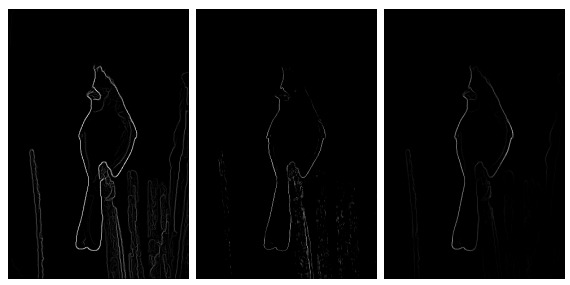

Combined texton, brightness, and color cues with oriented filters; compared to Canny/Sobel baselines.
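Oriented filter banks are the usual starting point for this kind of boundary detector: each filter responds most strongly to edges at one orientation, and the responses feed the texton clustering. A minimal sketch of a first-derivative-of-Gaussian bank; the scale, orientation count, and the name `oriented_dog_bank` are illustrative assumptions, and the texton, brightness, and color gradient stages are not shown. Each filter would then be convolved with the grayscale image (e.g., with `scipy.signal.convolve2d`).

```python
import numpy as np

def oriented_dog_bank(sigma=2.0, n_orients=8, size=None):
    """First-derivative-of-Gaussian filters at evenly spaced orientations in [0, pi).

    Orientations in [pi, 2*pi) would only flip the sign of these odd filters.
    Returns an array of shape (n_orients, size, size).
    """
    if size is None:
        size = int(2 * np.ceil(3 * sigma) + 1)  # cover about +/- 3 sigma
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    g = np.exp(-(x**2 + y**2) / (2 * sigma**2))
    filters = []
    for k in range(n_orients):
        theta = np.pi * k / n_orients
        # Directional derivative of the Gaussian along (cos theta, sin theta).
        d = -(x * np.cos(theta) + y * np.sin(theta)) / sigma**2 * g
        filters.append(d / np.abs(d).sum())  # normalize filter energy
    return np.stack(filters)
```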